Tutorial 4: Describing data

Describing data with R

Week of September 23, 2024

(4th week of classes)

How the tutorials work

The DEADLINE for your report is always at the end of the tutorial period.

REPORT INSTRUCTIONS: In addition to the problem at the end of the tutorial, there may be questions along this tutorial that need to be answered in the report (in the RStudio file).

While students may eventually be able to complete their reports without attending the synchronous lab/tutorial sessions, we strongly recommend that you participate. Please note that your TA is not responsible for providing assistance with lab reports outside of the scheduled synchronous sessions.

Your TAs

Section 0101 (Tuesday): 13:15-16:00 - Aliénor Stahl (a.stahl67@gmail.com)

Section 0103 (Thursday): 13:15-16:00 - Alexandra Engler (alexandra.engler@hotmail.fr)

General Information

Please read all the text thoroughly; do not skip any sections. The tutorials are centered around R exercises designed to help you practice running statistical analyses and interpreting the results.

Note: Once you get used to R, things get much easier. Since this is only your 3rd in-person tutorial, try to remember to:

- set the working directory

- create a RStudio file

- enter commands as you work through the tutorial

- save the file (from time to time to avoid loosing it) and

- run the commands in the file to make sure they work.

- if you want to comment the code, make sure to start text with: # e.g., # this code is to calculate…

General setup for the tutorial

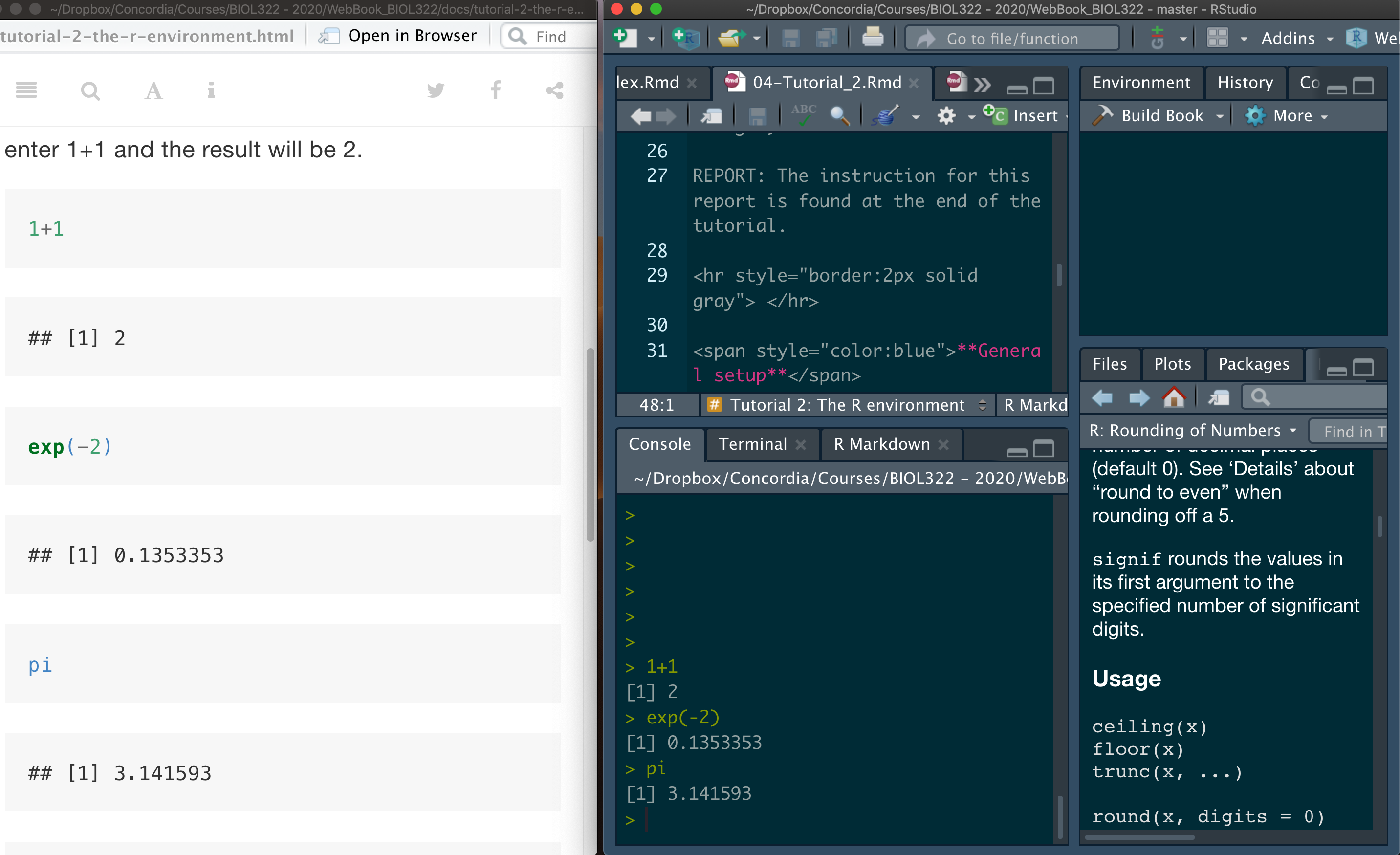

An effective approach is to have the tutorial open in our WebBook alongside RStudio.

Mean, median, variance, standard deviation & coefficient of variation

We will begin the tutorial by working with the data on gliding snakes, which was introduced in Lecture 4.

Download the gliding snakes data file

First open the file “chap03e1GlidingSnakes.csv” in excel and try to understand how the data are organized.

Now, upload and inspect the data. By now, you’ve probably noticed that the View function allows you to see the entire dataset, including the variable names (i.e., the column names of the data matrix). The head function, which prints the first six rows, is useful for identifying variable names if you’ve forgotten them. Knowing these names is crucial, as you’ll frequently refer to them during analysis. It’s common to forget variable names, even the ones you’ve created.

Additionally, you can use the function names to print just the variable names (i.e., the column names of the data matrix).

snakeData <- read.csv("chap03e1GlidingSnakes.csv")

View(snakeData)

head(snakeData)

names(snakeData)As you learned in Tutorial 3, data matrices are typically organized with variables as columns. In this dataset, the variable snake represents the identifier for each observational unit (i.e., individual snake), while undulationRateHz contains the speed (cycles per second) for each snake. There are various ways to access individual variables in a matrix, but the simplest method (as covered in Tutorial 3) is to use the $ sign. In this approach, the name of the data matrix comes before the $, and the name of the variable of interest follows it.

Calculate location statistics:

mean(snakeData$undulationRateHz)

median(snakeData$undulationRateHz)You can also save the values into a variable for later use:

mean.undulation <- mean(snakeData$undulationRateHz)

mean.undulation

median.undulation <- median(snakeData$undulationRateHz)

median.undulationCalculate spread (often referred as dispersion) statistics - the function sd stands for standard deviation and the function var for variance.

sd(snakeData$undulationRateHz)

var(snakeData$undulationRateHz)Coefficient of Variation - R doesn’t have a built-in function for calculating the coefficient of variation, but we can easily compute it with the following method:

sd(snakeData$undulationRateHz)/mean(snakeData$undulationRateHz) * 100We can also calculate the coefficient of variation (CV) as follows:

sd.undulation <- sd(snakeData$undulationRateHz)

sd.undulation/mean.undulation * 100Obviously you can also save CV into a variable as follows:

CV.undulation <- sd.undulation/mean.undulation * 100Let’s plot the frequency distribution of the data (i.e., histogram):

hist(snakeData$undulationRateHz)Making the histogram looks better:

hist(snakeData$undulationRateHz, breaks = seq(0.8,2.2,by=0.2), col = "firebrick",xlab="Undulation rate (Hz)",main="")You could have used the argument main to add a title to the graph, which would appear at the top. However, in reports and publications, it’s common to use legends instead of titles, so we left it empty by setting main=“” (i.e., nothing between the quotes).

Notice that the X and Y axes are not touching. By setting the xaxs and yaxs arguments to “i” for the X and Y axes, respectively, we can make them touch. The “i” stands for ‘internal’ as opposed to the default “r” for ‘regular.’ We call it ‘internal’ because it adjusts the axes to a non-standard configuration. Keep in mind, R has its fair share of idiosyncrasies—just like many other languages, both natural and programming. As I often say: ’Don’t fight the language, embrace it!

hist(snakeData$undulationRateHz, breaks = seq(0.8,2.2,by=0.2), col = "firebrick",xlab="Undulation rate (Hz)",main="",xaxs = "i",yaxs="i")Boxplots & interquartile range

Let’s use a different data set, the spider running speed data set (as seen in lecture 5).

Download the running spider data file

First open the file “chap03e2SpiderAmputation.csv” in excel and try to understand how the data are organized.

Upload and inspect the data:

spiderData <- read.csv("chap03e2SpiderAmputation.csv")

View(spiderData)

head(spiderData)

names(spiderData)Notice below that we’ve changed the way we reference variables. Instead of using the $ sign, some functions, like boxplot, allow you to refer to variables directly. These functions recognize variable names within the data matrix through the argument called data, making it easier to type and simplifying the code.

boxplot(speed ~ treatment, data = spiderData)Obviously we could have used $ instead of simply using the argument data in the function above. It would seem a little bit of more work to type though:

boxplot(spiderData$speed ~ spiderData$treatment)What is the problem with this boxplot? The issue is that the after speeds appear before the before speeds. This is because these variables are not being treated as numerical but rather as strings (i.e., a sequence of characters) and as such the letter a comes before the letter b in the alphabet. In this case, we need to transform the variable treatment into a numerical code (referred as to factor in R) in which we can also change the order of their appearance. This is done by using the function factor. This function is quite useful and we’ll use it in several types of analyses and tutorials in BIOL322:

spiderData$treatment <- factor(spiderData$treatment, levels = c("before", "after"))Now plot the histogram again; now before will appear before after; Ok, writing that was pretty circular but true!

boxplot(speed ~ treatment, data = spiderData)Now, let’s make the boxplot look much nicer. It’s easy to forget the arguments for R functions, including the ones used in boxplot. So, here is some additional information that you will be able to use in the future to make boxplot nicer than the ones produced by the default function boxplot; argument boxwex controls the width of the boxes so that we can make them narrower; here boxwex was set to 0.5 but “play” with the value to see what happens.

The argument whisklty sets the type of line for the whiskers - setting it to 1 the whiskers appear as solid lines (and not as a dahsed line as in the default, i.e., in the way was generated in the earlier boxplot). Finally, argument las makes the labels of axes appear in different directions - try las=0, las=1 and las=2 to see what happens (try in class but no need to include in the report). Here we used las=1 (needed to be included in the report):

boxplot(speed ~ treatment, data = spiderData, ylim = c(0,max(spiderData$speed)),col = "goldenrod1", boxwex = 0.5, whisklty = 1, las = 1,xlab = "Amputation treatment", ylab = "Running speed (cm/s)")By simply observing the boxplot, you can see that the distributions of before and after speeds are asymmetric. Refer to our lecture slides to determine whether each variable represents a right or left skewed distribution. Include this information in your tutorial report code file as shown below:

# before amputation the distribution type is "YOUR ANSWER" and after amputation the distribution type is "YOUR ANSWER".Don’t forget to use the symbol # so that the text you wrote will not be interpreted as commands.

Because the data are quite asymmetric, we will use location and spread statistics based on median and quartiles, respectively, instead of mean and standard deviations. Here we have two groups in the data, i.e., before and after. In tutorial 3, we introduced the function aggregate. This function selects data belonging to a similar categorical value in a list (here treatment, i.e., before or after) and applies a function on the basis of these values separately. Here we will use the median per treatment, hence median as the third argument in the function aggregate. The median is then calculated for each group as:

aggregate(spiderData$speed, list(spiderData$treatment), median)The use of the function aggregate with the function quantile is a bit “complex” (see below). To make it simpler, let’s create variables that contain the speed values before and after voluntary amputation separately. This is done using the function subset and setting its argument treatment as the name of the code used for each individual spider. Note that here, the argument treatment received a “==” instead of a “=”. Note that the double equal sign “==” is needed when using a logical statement indicating “is equal to” in R:

speedBefore <- subset(spiderData, treatment == "before")

speedBefore

speedAfter <- subset(spiderData, treatment == "after")

speedAfterNow let’s calculate the median, 25% (Q1), and 75% (Q3) quartiles using the quantile function. In this function, we specify the values at which quartiles are calculated using the prob argument. The term ‘prob’ (short for probability) is used here because we are inferring that there is a 25% probability of finding a value equal to or smaller than Q1 in the entire statistical population of spiders (i.e., all male spiders of that species during the mating season, either before or after amputation). Similarly, there is a 75% probability of finding a value equal to or smaller than Q3. Keep in mind that ‘chance’ here refers to the statistical concept of population inference, where sample values are used to estimate population parameters. Thus, we use the sample quartiles (Q1, Q2, and Q3) to make inferences about two populations: all male spiders of that species during mating season either before amputation (population 1) or after amputation (population 2).

median(speedBefore$speed)

median(speedAfter$speed)

quantile(speedBefore$speed, probs = c(0.25, 0.75))

quantile(speedAfter$speed, probs = c(0.25, 0.75))Note that the median Q2 of a distribution represents the value that divides the distribution of values into half; i.e., there is a 50% chance of finding a value equal or smaller than Q2; and a 50% chance of finding a value equal or greater than Q2 (i.e., prob for Q2 = 0.50); so that we could have calculated Q1, Q2 and Q3 just using the function quantile as follows:

quantile(speedBefore$speed, probs = c(0.25, 0.50, 0.75))

quantile(speedAfter$speed, probs = c(0.25, 0.50, 0.75))The interquartile range (IQR) for both speeds (before or after) can be calculated using the function IQR as follows:

IQR(speedBefore$speed)

IQR(speedAfter$speed)Remember that IQR = Q3 - Q1; so we could have equally calculated IQR as follows:

quantile(speedBefore$speed, probs = c(0.75))-quantile(speedBefore$speed, probs = c(0.25))

quantile(speedAfter$speed, probs = c(0.75))-quantile(speedAfter$speed, probs = c(0.25))Learning and practicing R further using the stickleback lateral plates data

Let’s practice the concepts of location and spread statistics using a different dataset. Working with varied types of data helps deepen your understanding of data structure and how R handles different datasets. For this exercise, we’ll use the stickleback lateral plates dataset from Lecture 6.

Download the stickleback data file

First open the file “chap03e3SticklebackPlates.csv” in excel and try to understand how the data are organized.

Upload and inspect the data:

sticklebackData <- read.csv("chap03e3SticklebackPlates.csv")

View(sticklebackData)

head(sticklebackData)Next, calculate the mean for each genotype. As in real languages, theare are many ways to say something, including words that are synonyms. Instead of the function aggregate, here we will calculate IQR using the function tapply instead; we will also learn how to round values using the function round:

meanPlates <- tapply(sticklebackData$plates, INDEX = sticklebackData$genotype,FUN = mean)

meanPlates <- round(meanPlates, digits = 1)

meanPlatesCalculate the median for each group:

medianPlates <- tapply(sticklebackData$plates, INDEX = sticklebackData$genotype,FUN = median)

medianPlates <-round(medianPlates, digits = 1)

medianPlatesAnd the standard deviations for each group:

sdPlates <- tapply(sticklebackData$plates, INDEX = sticklebackData$genotype, FUN = sd)

sdPlates <- round(sdPlates, 1)

sdPlatesThe interquartile range by group:

iqrPlates <- tapply(sticklebackData$plates, INDEX = sticklebackData$genotype,FUN = IQR, type = 5)

iqrPlatesLet’s learn something new. Let’s produce a table containing the number of individuals for each genotype. Here we will use the function table as follows:

sticklebackFreq <- table(sticklebackData$genotype)

sticklebackFreqThe total number of individuals independent of the genotype can be calculated in several ways in R but here we can simply:

sum(sticklebackFreq)The relative frequency of each genotype is given by:

sticklebackFreq/sum(sticklebackFreq)Finally, let’s create a boxplot. Notice how we can easily reuse the code from the first boxplot for the spider data—it’s mostly a matter of copying, pasting, and updating the variable names. This kind of code reusability is common in R, so keeping your scripts well-organized and clearly documented makes the process much more efficient—often, that’s ‘half the job done’ in R!

boxplot(plates ~ genotype, data = sticklebackData, ylim = c(0,max(sticklebackData$plates)), col = "goldenrod1", boxwex = 0.5, whisklty = 1, las = 1, xlab = "Genotype", ylab = "Number of plates")Miscellanea - Some advanced notes

If you are curious about developing stronger R skills, this will help you to practice it further. That said, you won’t need to use these functions in your reports.

Calculate Q1, Q2 and Q3 using the function aggregate for both speeds at the same time. The argument function (x) is used because the function quantile has probs as an argument.

aggregate(spiderData$speed, list(spiderData$treatment), function (x) quantile(x, probs = c(0.25, 0.50, 0.75)))Note that the above is a little more complex. IQR could had been equally calculated for both speeds using aggregate in the usual way we learned how to use the function aggregate. Because we are not using arguments for the function IQR as we needed for the function quantile, we don’t need to use the argument function (x) in aggregate.

aggregate(spiderData$speed, list(spiderData$treatment),IQR)We could also have calculated IQR using the function tapply (as seen earlier) as follows:

tapply(spiderData$speed, INDEX = spiderData$treatment, FUN = IQR)and the more complicated usage of the function quantile as follows given that quantile requires the argument probs.

tapply(spiderData$speed, INDEX = spiderData$treatment, function(x) FUN = quantile(x, probs = c(0.25, 0.50, 0.75)))Why are there so many different methods? Just like in natural languages, where we choose different expressions based on context—such as saying ‘I have a problem,’ ‘I have an issue,’ or ‘I have a dilemma’—the choice of functions in R can vary depending on the situation. For example, the tapply and aggregate functions were both used here to perform the same calculations as before, but each has unique capabilities that make it more suitable for certain tasks depending on the circumstances.

ACADEMIC INTEGRITY & AVOIDING ACADEMIC MISCONDUCT. We hold the principles of academic integrity, that is, honesty, responsibility and fairness in all aspects of academic life, at their highest values.

Some of our tutorials take years to perfect and are carefully designed to enhance your understanding of Biostatistics. While some reports may resemble those from previous years, you are not allowed to consult reports from current or past students. This would be an academic infraction. We have ways to detect similarities between reports. Academic infractions can apply not only to you but also to the students who shared their reports, even after they have graduated. Academic misconduct can result in permanent changes to a student’s record, reflecting violations of academic integrity.

Moreover, using previous reports shows a lack of respect for the effort and dedication of professors and instructors.

Report instructions

Describe a fictional biological problem, such as an experiment or observational study you designed, involving one quantitative variable. The observational units should be divided into two categories (e.g., male/female, genotype, freshwater/marine water, low/high pH, or any other categorical variable with two groups). Create a dataset by inventing numbers relevant to your problem, and save it in a CSV file. You can use Excel to generate the data and then save the file in CSV format.

Next, write R code to read the data file and calculate the mean, median, standard deviation, variance, and interquartile range for each category. Additionally, generate a boxplot to visualize the data. Use # to add comments describing your fictional problem within the code. For example:

# I set up a study that .....

# use as many lines as necessary,

# always starting with #

Code here:We have extensive experience with tutorials, and they are structured to ensure that students can complete them by the end of the session. Please submit both the RStudio file and the relevant CSV file through Moodle. A written report (e.g., a Word document) is not required (just the R file with your code as explained above).