Tutorial 6: Sample estimators

Biases and corrections in the estimation of sample variances, sample standard deviations, and confidence intervals

Week of October 7, 2024

(6th week of classes)

How the tutorials work

The DEADLINE for your report is always at the end of the tutorial.

The INSTRUCTIONS for this report is found at the end of the tutorial.

Students may be able after a while to complete their reports without attending the synchronous lab/tutorial sessions. That said, we highly recommend that you attend the tutorials as your TA is not responsible for assisting you with lab reports outside of the lab synchronous sections.

The REPORT INSTRUCTIONS (what you need to do to get the marks for this report) is found at the end of this tutorial.

Your TAs

Section 0101 (Tuesday): 13:15-16:00 - Aliénor Stahl (a.stahl67@gmail.com)

Section 0103 (Thursday): 13:15-16:00 - Alexandra Engler (alexandra.engler@hotmail.fr)

General Information

Please read all the text; don’t skip anything. Tutorials are based on R exercises that are intended to make you practice running statistical analyses and interpret statistical analyses and results.

Note: At this point you are probably noticing that R is becoming easier to use. Try to remember to:

- set the working directory

- create a RStudio file

- enter commands as you work through the tutorial

- save the file (from time to time to avoid loosing it) and

- run the commands in the file to make sure they work.

- if you want to comment the code, make sure to start text with: # e.g., # this code is to calculate…

General setup for the tutorial

A good way to work through is to have the tutorial opened in our WebBook and RStudio opened beside each other.

This tutorial is based on lecture 11, which, for some lab sections, it takes place afterwards (i.e., this coming Thursday). This poses no issues as the the lecture and tutorial are quite interrelated and the issues covered in lecture 11 are extensively covered in this tutorial as well

Demonstrating the importance of sample corrections and degrees of freedom

One of the challenging concepts in statistics is understanding degrees of freedom and why corrections are needed to ensure sample estimates are accurate and unbiased. For the purposes of this course—and in most standard basic and advanced applications of statistics—an accurate sample estimator is one where the average of the sample values for a statistic equals the population parameter. This principle applies to any estimator. We’ve already discussed how the average of all sample means equals the population mean. Now, let’s explore another statistic, the standard deviation, to deepen your understanding of what it means for an estimator to be unbiased (or accurate). When estimators are biased, statisticians often apply corrections to remove this bias.

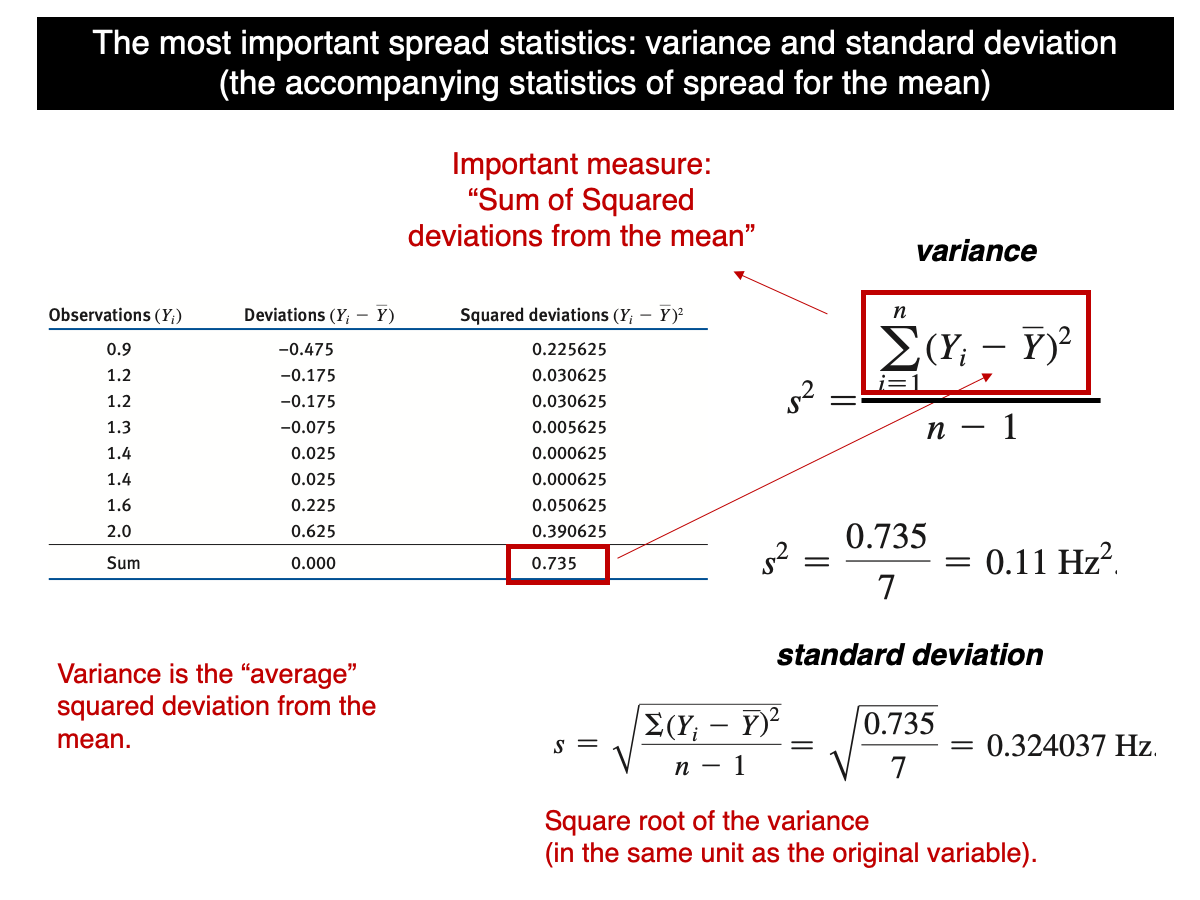

Let’s begin by recalling the formula for standard deviation:

In simple terms, the standard deviation is the square root of the sum of the squared differences (SS) between each value in the sample and the sample mean, divided by n-1. Many students encountering this for the first time often find it odd and wonder why SS isn’t divided by n instead of n-1. The reason lies in a correction called Bessel’s correction (https://en.wikipedia.org/wiki/Bessel%27s_correction), which is necessary to ensure the sample variance is an accurate (unbiased) estimate of the true population variance. Unbiased here means that, under random sampling, all possible sample variances from a population equal the population variance. In other words, in average, the sample variance will be equal to the true population variance and doesn’t tend to be greater or smaller. But that’s only when divided by n-1 as we will demonstrate (computationally) in this tutorial.

Let’s use a computational approach to demonstrate that when variance is calculated using n, it is biased, but when using n-1, it becomes unbiased.

The function ‘var’ in R is already based on n-1. This function could be equally entered “by hand” as follows. Note that the function rnorm considers the standard deviation of the population. So, if we set sd=2, then the variance of the population equals 4.

quick.sample.data <- rnorm(n=100,mean=20,sd=2)

var(quick.sample.data)

var.byHand <- function(x) {sum((x-mean(x))^2/(length(x)-1))}

var.byHand(quick.sample.data)Note that our function by hand and the standard R function var gives exactly the same results. Notice also that, as such, we can use R to program our own functions (i.e., use R as a programming language).

Now let’s make a function in which the standard deviation is calculated using n in the denominator instead of n-1:

var.n <- function(x){sum((x-mean(x))^2)/(length(x))}

var.n(quick.sample.data)Note that the value calculated using n is clearly smaller than the value calculated using n - 1. To understand this in the context of sampling estimation bias, let’s draw 100,000 samples from a normally distributed population with a sample size of n = 20, a population mean of 31, and a variance of 2 (which corresponds to a population standard deviation of 4).

lots.normal.samples.n20 <- replicate(100000, rnorm(n=20,mean=31,sd=2))For each sample, calculate the variance based on n-1 and n:

sample.var.regular <- apply(X=lots.normal.samples.n20,MARGIN=2,FUN=var)

sample.var.n.instead <- apply(X=lots.normal.samples.n20,MARGIN=2,FUN=var.n)Let’s calculate the mean of all sample variances based on n-1 and n.

mean(sample.var.regular)

mean(sample.var.n.instead)Note that the mean based on n-1 is extremely close to 4, i.e., the population parameter (not perfectly because we took 100000 samples instead of infinite). But the mean based on n is much smaller, demonstrating that the correction to make the variance unbiased is necessary due to the issue of degrees of freedom that are covered in lecture 11.

That said, although the variance based on n-1 is not biased, the standard deviation based on n-1 is biased because the expected value (mean of all possible sample standard deviations) is not equal the true population standard deviation. Let’s see this issue in practice:

sample.sd.regular <- apply(X=lots.normal.samples.n20,MARGIN=2,FUN=sd)

mean(sample.sd.regular)The population standard deviation is 2, but the calculated value above is slightly smaller (~1.97), indicating a bias. This bias arises from the square root transformation of the variance, and due to the mathematical complexity involved, we won’t go into too much detail. It is challenging to establish a universally unbiased procedure for the standard deviation, as the correction varies with sample size. Although attempts have been made to address this (which are beyond the scope of our course), the bias for normally distributed populations is typically negligible. As noted in more advanced discussions, the bias ‘has little relevance to applications of statistics since its need is avoided by standard inferential procedures’ that we will cover later in the course: https://en.wikipedia.org/wiki/Unbiased_estimation_of_standard_deviation.

Although the sample standard deviation is slightly biased, it remains useful for most statistical applications (except for some very advanced ones). In Lecture 10, we discussed how the t-distribution, which is used to calculate confidence intervals, is built using the sample standard deviations. This means the bias in sample standard deviations is already accounted for in the t-distribution. It’s also important to note that this bias depends on sample size. When sample sizes are large, the bias becomes negligible, and the t-distribution becomes narrower.

For curiosity’s sake, if the population is normally distributed, the sample standard deviation can be corrected by dividing it by a constant derived from complex statistical theory. However, as mentioned earlier, this correction is generally not applied because it’s unnecessary in most cases. The theory behind calculating this constant is quite advanced and won’t be covered in BIOL322. Still, I believe introducing it to you can help broaden your understanding of the depth and intricacies involved in statistics.

mean(sample.sd.regular/0.986)Note that the correction for n=20 is around 0.986. Once each standard deviation is divided by this constant, the standard deviation is now an accurate (unbiased) estimator of the true population standard deviation. Note how the mean of corrected standard deviations (above) is now is pretty much 2. If infinite samples were taken, than it would have been exactly 2.

The value of a sample estimator depends on the distribution of the population

Unlike the sample mean, which remains unbiased regardless of the shape of the population distribution (whether normal, uniform, asymmetric, bimodal, etc.), it’s not possible to find a universally unbiased estimate of the variance for all types of distributions. Bessel’s correction works well for populations that are normally distributed or close to normal. To illustrate these concepts, let’s use the Human Gene Length data (20,290 genes) as an example. As we’ve discussed in previous lectures, we can treat this gene data as representing the entire population.

Now load the data in R as follows:

humanGeneLengths <- as.matrix(read.csv("chap04e1HumanGeneLengths.csv"))

View(humanGeneLengths)

dim(humanGeneLengths)The mean and variance of the population are:

mean(humanGeneLengths)

var(humanGeneLengths)Look at the distribution of the data and notice how asymmetric the distribution is:

hist(humanGeneLengths,xlim=c(0,20000),breaks=40,col='firebrick')We will use the gene data to demonstrate the importance of the distribution of populations when using sample estimators. In this case we have the entire population, but the principle we will learn here applies to any unknown population which is what we usually have.

Let’s learn first how to take a sample from this population. For that we can use the function sample. The argument used here is size (here set to a sample size of n=100 genes):

geneSample100 <- sample(humanGeneLengths, size = 100)

geneSample100Now let’s assess the statistical properties of sample estimators (mean and variance here) by taking multiple samples (100000) of sample size n=100 from the gene population; and then assess whether the sample mean and the sample variance are unbiased estimators.

geneSample100 <- replicate(100000, sample(humanGeneLengths, size = 100))

dim(geneSample100)Let’s calculate the mean and variance for each sample:

gene.sample.means <- apply(geneSample100,MARGIN=2,FUN=mean)

gene.sample.var <- apply(geneSample100,MARGIN=2,FUN=var)And let’s compare the mean of all sample values with the population parameters:

c(mean(gene.sample.means),mean(humanGeneLengths))

c(mean(gene.sample.var),var(humanGeneLengths))Notice that the difference between the mean of the sample means and the population mean (i.e., all genes) is tiny, whereas the difference for the variance is much larger. As we know, the accuracy of the sample mean does not depend on the distributional properties of the population. In my samples, the difference between the mean of all sample means and the population mean was 2621.334 versus 2622.055—a very small difference of -0.721 (or 0.00027 when expressed as a proportion: 0.721 / 2622.055). This small difference is partly because we did not take all possible samples, instead using 100,000.

However, for the variance, the difference between the mean of all sample variances and the population variance in my 100,000 samples was 4,129,115 versus 4,149,425, a difference of 20,310. This is much larger than the difference for the mean (20,310 / 4,149,425 = 0.004894). Comparing the errors, the variance error is approximately 18 times greater than the error for the mean (0.004894 / 0.00027 = 18.13). Therefore, the sample variance is a biased (inaccurate) estimator for this asymmetric population.

However, remember that the variance is an unbiased estimator for normally distributed populations, as we discussed earlier in this tutorial.

As such, we can’t trust the sample variance as an estimator for the population variance for populations that are far from normally distributed.

Transformations can often remove the bias in sample estimates

Data transformation is commonly used to address statistical biases, including those that arise when the distribution of the population from which we sample is not normal. Let’s apply a log transformation here to see how it improves the estimation of the population variance for the gene data.

Let’s start by observing how the log-transformation affect the distribution of the population:

log.Genes <- log(humanGeneLengths)

hist(log.Genes,breaks=40,col='firebrick')Note how the distribution is much more symmetric after being log-transformed. The mean and variance of the population log-transformed are:

mean(log.Genes)

var(log.Genes)Let’s repeat the same process as before but we now log-transform the sample.

geneSample100 <- replicate(100000, sample(humanGeneLengths, size = 100))

gene.sample.means.log <- apply(log(geneSample100),MARGIN=2,FUN=mean)

gene.sample.var.log <- apply(log(geneSample100),MARGIN=2,FUN=var)And let’s compare the mean of all sample values with the population parameters log-transformed:

c(mean(gene.sample.means.log),mean(log(humanGeneLengths)))

c(mean(gene.sample.var.log),var(log(humanGeneLengths)))Notice how similar the estimates are now after applying the log transformation. Using transformations like the log or square-root (among others) is a common technique to improve the accuracy of sample estimators.

Using the t-distribution to calculate confidence intervals

Let’s use a data set containing the span of the eyes (in millimeters) of nine male individuals of the stalk-eyed fly.

Download the stalk-eyed fly data file

Upload and inspect the data:

stalkie <- read.csv("chap11e2Stalkies.csv")

View(stalkie)Let’s plot the histogram of the data. As you’ll see, the data appear relatively symmetric. This suggests that the distribution is approximately normal, allowing us to use the sample standard deviation as an estimate of the true population standard deviation. As we discussed in Lecture 10, confidence intervals are calculated using the t-distribution.

We’ll see later in the course how to assess the assumption of normality in a more rigorous way.

hist(stalkie$eyespan, col = "firebrick", las = 1,

xlab = "Eye span (mm)", ylab = "Frequency", main = "")Mean and standard deviation for the data:

mean(stalkie$eyespan)

sd(stalkie$eyespan)Using the t-distribution, the 95% confidence interval for the population mean is:

t.test(stalkie$eyespan, conf.level = 0.95)$conf.intThe lower and upper limits of the interval appear in the first row of the output, i.e., the 95% confidence interval for the population mean is 8.471616 |—-| 9.083940

The 99% confidence interval is:

t.test(stalkie$eyespan, conf.level = 0.99)$conf.intDemonstrating that confidence intervals based on the sample standard deviation (even though biased due to the square root used as seen above) and the t-distribution work for samples from normally distributed populations

Let’s use the stalk-eyed fly data for inspiration and draw samples from a normally distributed population that has the mean and standard deviation as those for the stalk-eyed fly data. Sample size will be the number of flies (observational units) is the stalk-eyed fly data (i.e., 9 individuals).

lots.normal.samples.flies <- replicate(100000, rnorm(n=9,mean=8.78,sd=0.398))Let’s build the confidence interval for each sample. For this, we need to build a small function that calculates for us CI:

Conf.Int <- function(x) {as.matrix(t.test(x,conf.level = 0.95)$conf.int)}Apply to the original stalk-eyed fly data which shows the same results as before but our custom function gives only the lower and upper limits for the confidence interval.

Conf.Int(stalkie$eyespan)Not let’s calculate the confidence interval for each of the 100000 samples we generated just above. This will take a little while (1 or so minutes depending on the computer):

conf.intervals <- apply(lots.normal.samples.flies,MARGIN=2,FUN=Conf.Int)

dim(conf.intervals)conf.intervals is a matrix of 2 rows and 100000 columns. Each row has the confidence interval for a sample (out of 100000).

The confidence interval for the first and last sample are:

conf.intervals[,1]

conf.intervals[,100000]Let’s put the left and right side in different vectors:

Left.value <- conf.intervals[1,]

Right.value <- conf.intervals[2,]What is the percentage of confidence intervals out of 100000 intervals that contain the true population parameter set in the way we generated samples (i.e., 8.78 mm)? This can be calculated as:

length(which(cbind(Left.value <= 8.78 & Right.value >= 8.78)==TRUE))/100000 * 100Nearly 95% of the sample-based confidence intervals contain the true population parameter, right? If we had drawn an infinite number of samples, it would have been exactly 95%. This shows that even though the confidence interval is based on the sample standard deviation, which is a biased estimator of the true population standard deviation, the confidence interval still behaves as expected. This reinforces the point we made earlier: ’Even though the sample standard deviation is biased (due to the square root transformation), it remains reliable and useful for most statistical applications.

Demonstrating that the confidence intervals based on the sample standard deviation do not work as well for samples from non-normally distributed populations

We previously demonstrated that the sample standard deviation is biased, but for normally distributed populations, this bias does not impact the accuracy of confidence intervals for the mean. In fact, 95% of the intervals still contain the true population mean because the t-distribution was designed to account for this bias. However, it’s important to remember that the t-distribution assumes sampling from a normally distributed population. Since confidence intervals for the population mean are based on the sample standard deviation, what happens when the population is not normally distributed?

mean.pop.genes <- mean(humanGeneLengths)

mean.pop.genes

geneSample30 <- replicate(100000, sample(humanGeneLengths, size = 30))

conf.intervals.genes <- apply(geneSample30,MARGIN=2,FUN=Conf.Int)

Left.value <- conf.intervals.genes[1,]

Right.value <- conf.intervals.genes[2,]

length(which(cbind(Left.value <= 2622.027 & Right.value >= 2622.027)==TRUE))/100000 * 100Note that this value is around 93% and not very close to the expected 95%. So, in this case the t-distribution, which was based incorporating the bias in the sample standard deviation, does not hold really well for a population that is not normally distributed.

Report instructions

This report addresses the following question: What happens when we log-transform the sample values? Do confidence intervals perform better or worse than expected? To answer this, you will need to adapt the code learned in this tutorial using the Human Gene data set. Submit the code and be sure to include a written explanation of your findings.

# use as many lines as necessary,

# comments always starting with #We have extensive experience with tutorials, and they are structured to ensure that students can complete them by the end of the session. Please submit both the RStudio file through Moodle. A written report (e.g., a Word document) is not required (just the R file with your code as explained above).